Predictive Analytics in Real Estate

AI-driven predictive analytics has much to offer the real estate sector. Let's see how the industry can use AI to get a jump on an uncertain market.

The real estate sector accounts for a significant slice of all the wealth on the planet, and of the power that accompanies that wealth. Though the global property market is calculated to be the third largest world industry, after insurance provision and pension funds1, the value of all existing land and property was estimated in 2016 at US$217 trillion, with residential property accounting for 75% of that2. The figure has risen since, and the global pandemic and the move away from cities have only fueled the real estate sector further3,4.

The numerous markets and sub-markets that grew up in tandem around this huge locus of money have been seeking certainty and consolidation through technology for centuries, and were vanguard adopters of new forecasting methodologies5, construction techniques6 and rent-enabling technologies7,8.

However, the sector has recently become more conservative, as the pace of innovation has quickened9, with a smaller and more adventurous tier of organizations turning to AI software development to formulate predictive analytics solutions capable of addressing the new challenges of the market.

In this article we’ll take a look at current implementations of AI in real estate when it comes to predictive analytics and at some of the possibilities that new research might offer for the sector.

PropTech: The Growth of Real Estate Technologies

Between 1954 and 2003, the real estate tech sector was relatively stable. Traditional metrics and statistical models provided comforting continuity for the market, and innovation was rare, limited to the periodic availability of new or improved metrics, or to the general digitization of the market after the millennium10.

As machine learning technologies grew in capability throughout the early 2000s, related growth in property technologies (PropTech) became noticeable; but after the advent of GPU-accelerated machine learning techniques around 2010, the level of commitment deepened considerably.

Since 2015, investment in real estate technologies has seen considerable further growth11, though this was hindered in early 2020 by the emergence of COVID-19.

Startup funding quadrupled in the four years following 2015, reaching US$8.9 billion of investment across more than 500 deals in the year before the pandemic, while deal activity increased by 61% over the same period.
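
For context, "quadrupled in four years" implies a steep compound rate. Solving (1 + r)^4 = 4 for r gives r = 4^(1/4) - 1, or about 41% a year on average. The small helper below (its name is our own) makes the arithmetic explicit:

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years` years."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    # Solve (1 + r) ** years == multiple for r
    return multiple ** (1.0 / years) - 1.0


# "Quadrupled in four years" -> a 4x multiple over 4 years
rate = implied_annual_growth(4.0, 4)
print(f"Implied average annual growth: {rate:.1%}")
```

This is an average: actual year-on-year growth was almost certainly uneven.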

One survey from 2020 reports that nearly 66% of respondents adopted new PropTech approaches in 2019, while 90% anticipate a continuing upward trend in this sector12. In a 2017 report, 65% of the leading real estate executives surveyed were likely to prioritize predictive analytics over other emerging technologies13.

AI-Based Predictive Analytics in Action

It took 3-4 years after the advent of GPU-accelerated machine learning (in 2010-11) for a slate of new AI-enabled companies and platforms to make an impact on the real estate market, while a number of older names in traditional real estate analytics have pivoted to also adopt predictive analytics:

- One Californian real estate company uses AI-powered predictive analytics to sift the high volumes of data generated by real estate agents, identifying which leads resulted in a successful sale14.

- Another startup out of California uses image recognition to evaluate damage from smoke, water, and other attritional factors that may affect the sale or rental value of a property15.

- Launched in 2014, Opendoor is a digital platform for buying and selling homes that combines the outputs of several different machine learning valuation models to arrive at better estimates of what a property is worth16 (a technique known as ensembling17).
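
To illustrate ensembling in general (this is not Opendoor's system, which the source does not describe in detail), the sketch below combines three toy valuation models through a weighted average, giving more weight to models with lower validation error. Inverse-error weighting is only one simple scheme, and the models, coefficients and error figures are all invented for the example:

```python
from typing import Callable, Dict, List

Property = Dict[str, float]
ValuationModel = Callable[[Property], float]


# Three deliberately simple, hypothetical valuation models.
def comps_model(p: Property) -> float:
    # Floor area times the price per sq ft of recent nearby comparable sales
    return p["sqft"] * p["comp_price_per_sqft"]


def hedonic_model(p: Property) -> float:
    # Toy linear model with made-up coefficients for size, bedrooms and age
    return 50_000 + 180 * p["sqft"] + 15_000 * p["bedrooms"] - 1_000 * p["age_years"]


def index_model(p: Property) -> float:
    # Last sale price rolled forward by the growth of a local price index
    return p["last_sale_price"] * p["area_index_growth"]


def ensemble_estimate(p: Property, models: List[ValuationModel], val_errors: List[float]) -> float:
    """Weighted average of the models' estimates, each weighted by 1 / its validation error."""
    if not models or len(models) != len(val_errors) or any(e <= 0 for e in val_errors):
        raise ValueError("need one positive validation error per model")
    weights = [1.0 / e for e in val_errors]
    return sum(w * m(p) for w, m in zip(weights, models)) / sum(weights)


house = {"sqft": 1500, "comp_price_per_sqft": 300, "bedrooms": 3,
         "age_years": 20, "last_sale_price": 400_000, "area_index_growth": 1.1}
# Hypothetical validation errors (e.g. median absolute percentage error) per model
estimate = ensemble_estimate(house, [comps_model, hedonic_model, index_model], [0.05, 0.10, 0.08])
print(round(estimate))
```

The combined estimate always lies between the lowest and highest individual estimates, which is part of why ensembles tend to be more stable than any single model.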

- Property platform Mashvisor uses machine learning-assisted predictive analytics to provide tools to help clients locate ideal investment properties, choose the best location for development, and evaluate optimal rental strategies18.

- Enodo Score uses AI-driven predictive analytics to evaluate whether upgrades and improvements to an apartment or house would be rewarded with commensurate rises in property values19.

- San Francisco-based Spacequant uses data from operating statements and rent rolls to develop machine learning analytics to facilitate loan origination and to provide real-time data on commercial real estate performance, among other services20.

- Besides a wide portfolio of AI real estate services and a database of 110 million US properties, Zillow hosts a tech hub and research group dedicated to new applications of AI to the real estate market. The company runs its Zestimate valuation tool on Amazon Kinesis and on Apache Spark running on Amazon EMR21.

A New Era of Predictive Analytics

Predictive analytics maps hypothetical relationships between past trends and future events in order to produce actionable insights. Though the principle dates back several millennia, it has been disrupted in the last two decades by a variety of factors, including the digital revolution, the advent of big data, the advance of IoT, and the data analysis capabilities of the latest generation of machine learning systems.

Using Supervised Machine Learning to Entrench Older Analytics Models

The initial response to the higher capabilities of machine learning systems was to improve the calculation speed and accuracy of traditional predictive theories: theories that were designed within the prior limitations of available data sources (such as actuarial tables and planning records), as well as within the parameters of the more limited hardware and software capabilities of previous eras.

Under these older predictive models, data sources would be labelled and processed in accordance with the tenets of the governing theory, and all other data dismissed as outlier anomalies. By analogy, one could compare this to designing an 'electric horse' in 1886 instead of the combustion engine.

Data Relationship Discovery Through Unsupervised Machine Learning

Arguably, the real value of AI and big data in real estate lies elsewhere. Unsupervised machine learning approaches can identify and map new relationships across a far wider gamut of data points than was available to earlier systems22.

This requires a measure of courage, since unsupervised machine learning may exploit data that has never been available (or accessible) before, or produce mappings that are unprecedented and cannot be carried over to previous models, or to the historical statistical data that they produced.

This undermines the continuity of forecasting systems that may date back decades (or even centuries) in favor of new relationship-mappings that are capable of far greater accuracy but have not yet achieved historical maturity or general market confidence23,24.
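
As a minimal illustration of unsupervised structure discovery, the sketch below runs plain k-means (Lloyd's algorithm) over unlabeled property rows. k-means is only one simple unsupervised technique, chosen here for brevity; the feature columns and values are hypothetical, and seeding with the first k rows is an assumption made for reproducibility:

```python
import math
from typing import List


def standardize(rows: List[List[float]]) -> List[List[float]]:
    """Rescale each column to zero mean and unit variance so no single feature dominates distances."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0 for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def nearest(point: List[float], centroids: List[List[float]]) -> int:
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda j: math.dist(point, centroids[j]))


def kmeans(points: List[List[float]], k: int, max_iter: int = 100) -> List[int]:
    """Lloyd's k-means seeded with the first k points; returns a cluster label per point."""
    centroids = [list(p) for p in points[:k]]
    labels: List[int] = []
    for _ in range(max_iter):
        new_labels = [nearest(p, centroids) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # leave a centroid in place if its cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Hypothetical unlabeled rows: [price per sq ft, % tree cover, cafes within 500 m, review sentiment 0-1]
rows = [
    [310, 35, 6, 0.8],
    [180, 5, 1, 0.2],
    [300, 40, 7, 0.7],
    [175, 8, 0, 0.3],
    [320, 30, 5, 0.9],
    [190, 4, 1, 0.1],
]
labels = kmeans(standardize(rows), k=2)
print(labels)
```

The clusters come back unnamed: deciding what ties each group together (and whether that relationship holds over time) is left to the analyst, which is precisely the historical-maturity problem described above.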

Using AI to Assess Local Property Values

Besides obvious factors such as space, age, local crime statistics, the state of the economy and school access, some very abstract data can come into play when estimating the current value of real estate.

These include the presence of trees, the frequency of earthquakes, pandemics, the proximity of coffee shops and grocery stores, and the quality of online reviews for the area. Also, traditional automated valuation models can fall short in areas where houses are traded infrequently, such as Korea25. In such cases, an area's property value index may be calculated from more recent data for similar or adjacent locations.

However, such 'translocation' of data can be problematic where the current prevailing conditions are different — for instance, after the advent of a global pandemic that has affected the traditional model of centralized cities26.
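
A minimal sketch of borrowing an index from nearby areas, assuming inverse-distance weighting (a common spatial interpolation scheme, not necessarily the method of the cited Korean research). The `AreaIndex` type, area names, coordinates and index values are hypothetical:

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class AreaIndex:
    name: str
    lat: float
    lon: float
    index: float  # recent property value index for that area


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def borrowed_index(lat: float, lon: float, neighbors: List[AreaIndex], power: float = 2.0) -> float:
    """Inverse-distance-weighted average of neighboring areas' indices: closer areas count more."""
    if not neighbors:
        raise ValueError("need at least one neighboring area")
    num = den = 0.0
    for n in neighbors:
        d = haversine_km(lat, lon, n.lat, n.lon)
        if d == 0.0:
            return n.index  # the target sits exactly on a known area
        w = 1.0 / d ** power
        num += w * n.index
        den += w
    return num / den


neighbors = [
    AreaIndex("area_a", 37.60, 127.00, 104.0),
    AreaIndex("area_b", 37.40, 127.10, 98.0),
]
print(round(borrowed_index(37.55, 127.02, neighbors), 1))
```

The weights encode distance only: they cannot tell whether conditions in the neighboring areas still resemble those of the target, which is exactly the translocation risk just described.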

Harnessing Strange Variables for Property Valuation

According to McKinsey, nearly 60% of the predictive power of AI-driven real estate analytics comes from non-traditional variables.

Taking these new data sources into account involves some sacrifice, because it's impossible to maintain decades of statistical continuity when novel sources come along and disrupt the variables. Nonetheless, this bold approach yields deeper and non-obvious insights into factors affecting rental or sale valuation.

Integrating Regional, National and Global Data

Variables for machine learning datasets related to real estate may benefit from a wider view of economics and world affairs. Since there are subtle but essential relationships between credit, gross domestic product (GDP) and real estate markets27, a myopic focus on historical local property values is likely to miss international trends that should be considered when evaluating property prices.

For instance, the conditions that underpinned the financial crisis of 2008 (increased lending and high loan-to-value mortgage agreements) inevitably drove forecasts of rising US property values under traditional analytical models.

This is because demand was continuing to outstrip availability, barriers to entry were low, and the historical variables for the likelihood of a new property slump were too tied to past context to be captured by the modeling parameters in use at the time28. The subsequent crash revealed the shortcomings and limited focus of both the data and the forecasting methodologies.

Likewise, the effect that the pandemic has had on global property values has brought into focus the need for machine learning frameworks to account for historically absent factors that sit far outside the traditional data-gathering scope — but without defaulting to pessimistic predictions that fail to account for 'local resilience' in a real estate market.
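
As a sketch of widening the view beyond local price history, the function below joins a quarterly local price index with national GDP and credit growth series. All series names and figures are made up for illustration; quarters missing from any series are skipped rather than imputed, and the credit-minus-GDP column is only a rough proxy for lending outpacing the wider economy:

```python
from typing import Dict, List, Tuple


def build_feature_rows(
    local_index: Dict[str, float],
    gdp_growth_pct: Dict[str, float],
    credit_growth_pct: Dict[str, float],
) -> List[Tuple[str, Dict[str, float]]]:
    """Join a local price index with national macro series on a shared quarter key."""
    rows = []
    quarters = sorted(local_index)  # keys like "2007Q1" sort chronologically
    for prev, cur in zip(quarters, quarters[1:]):
        if cur not in gdp_growth_pct or cur not in credit_growth_pct:
            continue  # skip quarters with incomplete macro data instead of guessing
        rows.append((cur, {
            "local_price_change_pct": (local_index[cur] / local_index[prev] - 1) * 100,
            "gdp_growth_pct": gdp_growth_pct[cur],
            "credit_growth_pct": credit_growth_pct[cur],
            # Rough proxy for credit growing faster than the wider economy
            "credit_minus_gdp_pct": credit_growth_pct[cur] - gdp_growth_pct[cur],
        }))
    return rows


# Made-up figures for illustration only
local = {"2007Q1": 100.0, "2007Q2": 101.5, "2007Q3": 102.0, "2007Q4": 101.0}
gdp = {"2007Q2": 0.8, "2007Q3": 0.6, "2007Q4": 0.3}
credit = {"2007Q2": 2.5, "2007Q3": 2.8}
for quarter, features in build_feature_rows(local, gdp, credit):
    print(quarter, features)
```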

Controlling Statistical Wildfires

If a machine learning system does not have access to factors that will influence a real estate analytics model, real-life people are likely to fill in the gap, confounding the model with unanticipated market behavior.

For instance, after the property crash of 2008, individual real estate markets in the UK reacted proactively and independently based on a number of imponderable factors, such as emerging news of the crisis from the US29.

This meant that one neighborhood, or even one street, would suddenly have several houses for sale, lowering local property values in defiance of a more robust national market, and, inevitably, contributing to a wider spread of property devaluation.

A well-designed predictive analytics model needs the ability to identify and ring-fence sporadic local events of this nature, rather than treating them as coalmine canaries that augur wider market disruption (and thereby act out a self-fulfilling prophecy).
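
One simple way to ring-fence such events, sketched here under our own assumptions, is to compare a street's new listings against its own history and against the national series: a spike that is extreme locally but unremarkable nationally is labeled "local" and can be kept out of wider forecasts. The 3-standard-deviation threshold and the example counts are hypothetical:

```python
import math
import statistics
from typing import List


def zscore(history: List[float], current: float) -> float:
    """How many standard deviations `current` sits from the mean of `history`."""
    mean = statistics.mean(history)
    sd = statistics.pstdev(history)
    if sd == 0:
        return 0.0 if current == mean else math.copysign(math.inf, current - mean)
    return (current - mean) / sd


def classify_listing_spike(
    local_history: List[int], local_now: int,
    national_history: List[int], national_now: int,
    threshold: float = 3.0,
) -> str:
    """Label a jump in new listings as 'normal', 'local' (ring-fenced) or 'systemic'."""
    local_z = zscore(local_history, local_now)
    national_z = zscore(national_history, national_now)
    if local_z < threshold:
        return "normal"
    # A local spike with no national counterpart is treated as a contained event,
    # not as a leading indicator for the wider market.
    return "systemic" if national_z >= threshold else "local"


street = [1, 0, 2, 1, 1, 0, 1, 2]  # new listings per month on one street
national = [1000, 1020, 990, 1010, 1005, 995, 1015, 985]  # national new listings (scaled)
print(classify_listing_spike(street, 6, national, 1012))
```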

Using Predictive Analytics for Location Planning

As we've seen, proximity to major coffee chain outlets is a new and interesting factor affecting real estate valuation; but the larger-scale operators themselves also use predictive analytics via machine learning to identify areas where they should open new stores.

A predictive analytics system with this remit has to consider a wide variety of factors30:

- Demographic data by segment and area code

- Local property price index

- Store level and size appropriate for the locality

- Local culture

- Crime rate and type

- Established competitors

- Planning applications for competitors

- Parking availability

- Local transport network

- Local purchasing power

- Cost-effectiveness of area rents
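
A common starting point for combining factors like these is a weighted score. The sketch below min-max normalizes a subset of the factors across candidate sites and ranks them; the factor names, weights and site figures are all hypothetical, and a production system would more likely learn the weights from historical store performance:

```python
from typing import Dict, List, Tuple

# Hypothetical weights for a subset of the factors listed above.
# True = higher is better; False = lower is better (e.g. rent, crime).
FACTORS: Dict[str, Tuple[float, bool]] = {
    "purchasing_power": (0.30, True),
    "transit_stops": (0.20, True),
    "parking_spaces": (0.10, True),
    "rent_per_sqm": (0.20, False),
    "crime_rate": (0.10, False),
    "competitors_nearby": (0.10, False),
}


def rank_sites(sites: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Min-max normalize each factor across candidate sites, then rank by weighted score."""
    scores = {name: 0.0 for name in sites}
    for factor, (weight, higher_is_better) in FACTORS.items():
        values = [s[factor] for s in sites.values()]
        lo, hi = min(values), max(values)
        for name, s in sites.items():
            norm = 0.5 if hi == lo else (s[factor] - lo) / (hi - lo)
            scores[name] += weight * (norm if higher_is_better else 1.0 - norm)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


candidates = {
    "high_street": {"purchasing_power": 42_000, "transit_stops": 12, "parking_spaces": 40,
                    "rent_per_sqm": 55, "crime_rate": 8, "competitors_nearby": 1},
    "retail_park": {"purchasing_power": 38_000, "transit_stops": 3, "parking_spaces": 300,
                    "rent_per_sqm": 30, "crime_rate": 4, "competitors_nearby": 2},
    "suburb": {"purchasing_power": 35_000, "transit_stops": 2, "parking_spaces": 60,
               "rent_per_sqm": 25, "crime_rate": 3, "competitors_nearby": 0},
}
for site, score in rank_sites(candidates):
    print(f"{site}: {score:.2f}")
```

Min-max scaling keeps every factor on a 0-1 scale, so the weights alone express relative importance; the trade-off is that scores are only comparable within one batch of candidates.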

Starbucks, which operates over 32,600 stores worldwide31, uses a commercial mapping and business intelligence service32 to identify the best locations for new outlets. The framework collates and interprets a high volume of statistical data for each location, and even considers whether a new outlet would be compromised by the opening of a competing chain in the same area (this strictly mathematical rationale also explains why popular chains sometimes run outlets within sight of each other33).
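
The competitor question can be illustrated with the Huff gravity model, a classic published retail-location technique in which shoppers choose among stores with probability proportional to attractiveness divided by a power of distance. We are not suggesting this is what Starbucks' provider uses; the coordinates, spend figures and attractiveness values below are hypothetical:

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]   # planar coordinates in km (hypothetical)
Store = Tuple[Point, float]   # (location, attractiveness, e.g. relative floor area)


def huff_shares(origin: Point, stores: Dict[str, Store], decay: float = 2.0) -> Dict[str, float]:
    """Huff model: probability that shoppers at `origin` choose each store."""
    utility = {}
    for name, (loc, attractiveness) in stores.items():
        d = max(math.dist(origin, loc), 0.1)  # floor the distance to avoid division by zero
        utility[name] = attractiveness / d ** decay
    total = sum(utility.values())
    return {name: u / total for name, u in utility.items()}


def expected_demand(store: str, demand: List[Tuple[Point, float]],
                    stores: Dict[str, Store], decay: float = 2.0) -> float:
    """Sum each demand point's spend weighted by its probability of choosing `store`."""
    return sum(spend * huff_shares(p, stores, decay)[store] for p, spend in demand)


# Hypothetical demand points (location, weekly spend) and stores
demand = [((0.0, 0.0), 1_000.0), ((1.0, 0.5), 800.0), ((2.0, 2.0), 600.0)]
stores = {"candidate": ((0.5, 0.5), 1.0), "existing_rival": ((2.0, 1.5), 1.0)}
before = expected_demand("candidate", demand, stores)

# Re-run with a competitor's pending planning application included
with_new_rival = {**stores, "planned_rival": ((0.8, 0.2), 1.2)}
after = expected_demand("candidate", demand, with_new_rival)
print(f"candidate demand: {before:.0f} -> {after:.0f}")
```

The same model can include a chain's own existing outlets, which makes it possible to estimate how much demand a new store would draw from its siblings as well as from rivals.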

The framework also helps in determining which locations should offer 'Starbucks evenings', occasions where alcohol is sold in store — another case where it’s necessary to evaluate local demographics, among other factors.

New Research in Real Estate Predictive Analytics

Ongoing progress in predictive analytics for real estate depends on new innovations, models and approaches that offer novel ways to turn big data and public-facing resources into effective machine learning analytics.

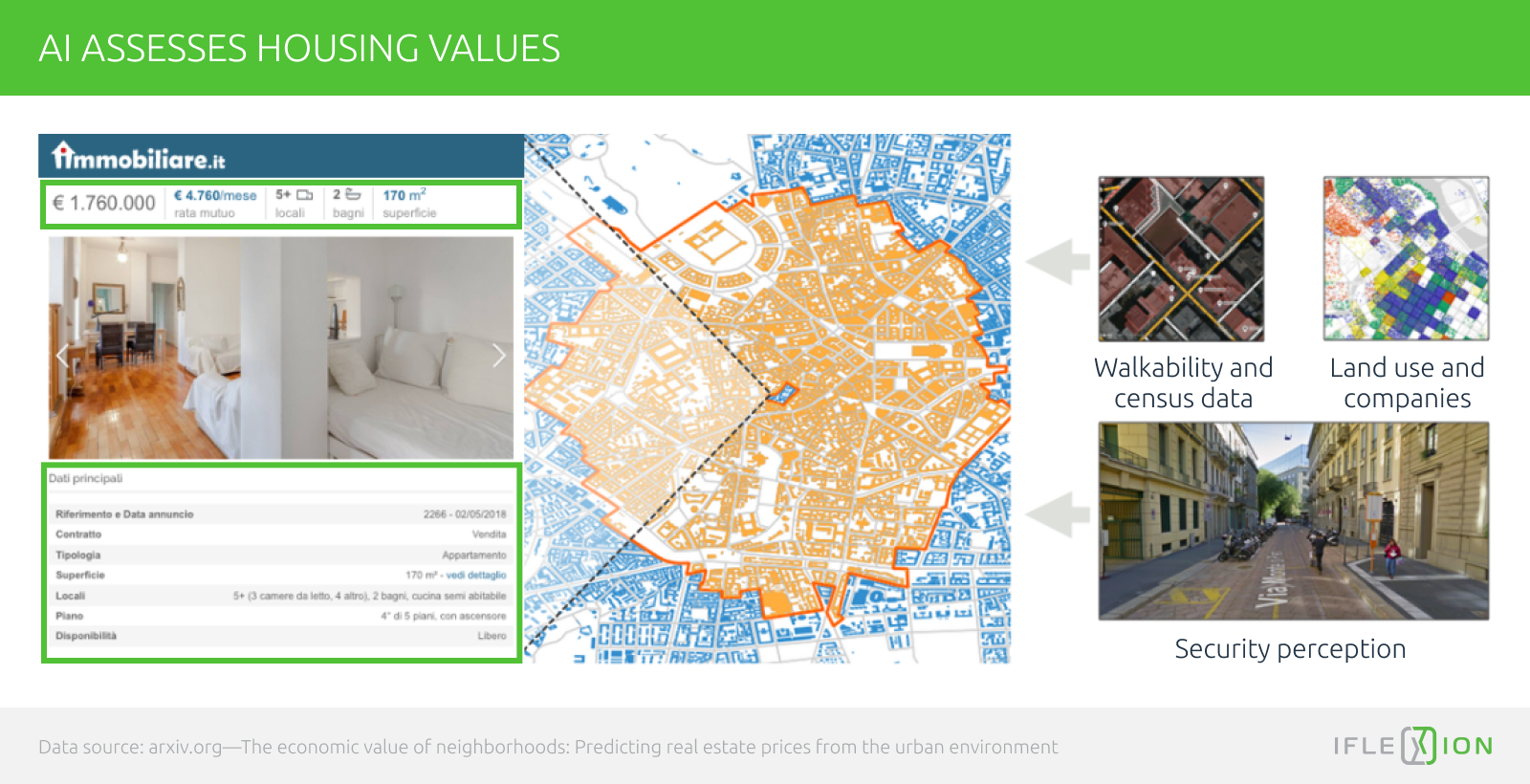

Assessing The Impact of Neighborhood Characteristics On Housing Values

Italian researchers trained a gradient-boosting algorithm on data from eight Italian cities, analyzing 70,000 housing listings to discover how the visible and perceptual characteristics of a neighborhood influence property valuation34.

One of the challenges of the project is defining the boundaries of an urban neighborhood, and assessing the catchment area in which one particular set of characteristics predominates. To this end, the project, which is openly available on GitHub35, defines egohoods: census-driven blocks that may overlap different neighborhoods.
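
Assuming an egohood is built, as in one common definition, from all census blocks within a fixed radius of each focal block, neighborhood features can be computed per block as below. This is our own sketch, not code from the project's repository; the block centroids and green-space values are hypothetical:

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # block centroid in projected km coordinates (hypothetical)


def egohood_means(centroids: Dict[str, Point], values: Dict[str, float], radius_km: float) -> Dict[str, float]:
    """For each focal block, average `values` over every block whose centroid lies within radius_km.

    Neighboring egohoods share blocks, so unlike fixed neighborhood boundaries they overlap.
    """
    means = {}
    for focal, center in centroids.items():
        members = [b for b, c in centroids.items() if math.dist(center, c) <= radius_km]
        means[focal] = sum(values[b] for b in members) / len(members)
    return means


# Four blocks along a street, with a hypothetical "share of green space" per block
centroids = {"b0": (0.0, 0.0), "b1": (1.0, 0.0), "b2": (2.0, 0.0), "b3": (3.0, 0.0)}
green_share = {"b0": 0.1, "b1": 0.3, "b2": 0.5, "b3": 0.7}
print(egohood_means(centroids, green_share, radius_km=1.0))
```

Each block's egohood average can then be fed to a model such as gradient boosting as a feature for every listing located in that block.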

'Fake News' in The Real Estate Market

A 2018 research project from Spain36 highlights the difficulty of pre-processing data for the real estate market, particularly when using advertised listings as a data stream. The project applies four different machine learning techniques (support vector regression, k-nearest neighbors, ensembles of regression trees, and multi-layer perceptrons) to a variety of data sources similar in scope to those of the Italian research mentioned above.

Though ensembles of regression trees performed best in the study, the researchers note that the model still produces high mean and median errors, and suggest that using property listings can result in 'mislabeled data' when a house or apartment goes on sale at a price that isn't realistic for the market.
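
k-nearest neighbors, one of the four techniques the Spanish study compared, is simple enough to sketch with the standard library. The listings below are hypothetical, with features assumed to be already scaled to comparable ranges; the final held-out listing is deliberately priced far above comparable homes to mimic the mislabeling problem:

```python
import math
import statistics
from typing import List, Sequence, Tuple

Listing = Tuple[Sequence[float], float]  # (scaled features, asking price in thousands)


def knn_predict(train: List[Listing], x: Sequence[float], k: int = 3) -> float:
    """Average the asking prices of the k training listings nearest to x."""
    closest = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    return statistics.mean(price for _, price in closest)


def error_summary(train: List[Listing], test: List[Listing], k: int = 3) -> Tuple[float, float]:
    """Mean and median absolute error over held-out listings."""
    errors = [abs(knn_predict(train, x, k) - y) for x, y in test]
    return statistics.mean(errors), statistics.median(errors)


train = [
    ((1.0, 2.0), 200.0), ((1.1, 2.0), 210.0), ((0.9, 2.0), 190.0),
    ((3.0, 4.0), 500.0), ((3.1, 4.0), 520.0), ((2.9, 4.0), 480.0),
]
# The last held-out listing is advertised far above comparable homes
held_out = [((1.0, 2.0), 205.0), ((3.0, 4.0), 500.0), ((3.0, 4.0), 700.0)]
mae, median_ae = error_summary(train, held_out)
print(f"mean abs error: {mae:.1f}, median abs error: {median_ae:.1f}")
```

Here the single unrealistic asking price pushes the mean absolute error to roughly 68 while the median stays at 5, which is one reason to report both.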

It would be very useful to have data correlating a property's actual sale price with its listing price, but this is rarely public-facing, and would in any case be difficult to automate into a data stream.

It would also be useful to track a property listing and observe how long it remains 'for sale', and the frequency and variance of any change in the price over the course of the listing, since these factors are potential indicators of an unrealistic initial valuation and would benefit a predictive analytics model.

However, not only is this a notable logistical challenge, but a data stream that monitors only digital listings could misinterpret the removal of a property from the listings as a 'sale' at the stated price (when in fact the price had been privately negotiated down) or as a 'withdrawal' from the market, i.e. a failed sale, since it has no image recognition camera trained on the property looking for a new 'SOLD' sign.
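
The listing-tracking idea can be sketched as a function over periodic snapshots of a single listing. The `Snapshot` type, field names and example prices are hypothetical; the key design choice is that a listing which disappears is reported as "delisted (outcome unknown)" rather than as a sale, reflecting the ambiguity just described:

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Union


@dataclass
class Snapshot:
    seen_on: date
    price: Optional[float]  # None once the listing is no longer found


def listing_signals(history: List[Snapshot]) -> Dict[str, Union[int, float, str]]:
    """Summarize a listing's history: days listed, price cuts, total reduction and status."""
    live = [s for s in history if s.price is not None]
    if not live:
        raise ValueError("history contains no live snapshots")
    prices = [s.price for s in live]
    cuts = [earlier - later for earlier, later in zip(prices, prices[1:]) if later < earlier]
    return {
        "days_listed": (live[-1].seen_on - live[0].seen_on).days,
        "price_cuts": len(cuts),
        "total_reduction_pct": (prices[0] - prices[-1]) / prices[0] * 100,
        # A disappearance is not assumed to be a sale: it may be a sale at a
        # negotiated price or a withdrawal, so the outcome stays ambiguous.
        "status": "delisted (outcome unknown)" if history[-1].price is None else "active",
    }


history = [
    Snapshot(date(2021, 1, 1), 300_000.0),
    Snapshot(date(2021, 2, 1), 290_000.0),
    Snapshot(date(2021, 3, 1), 290_000.0),
    Snapshot(date(2021, 4, 1), 275_000.0),
    Snapshot(date(2021, 5, 1), None),
]
print(listing_signals(history))
```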

Conclusion

It seems likely that the continuing growth and adoption of predictive analytics in real estate will depend on several factors:

- An end to the uncertainty around AI regulation, ensuring that new and fruitful data architectures for predictive analytics do not depend on sources that are imperiled by pending government legislation.

- The emergence of a definitive market leader — a corporation or start-up with a clear product or service, an unassailable business model for the sector, and a FAANG-style taste for publicity.

- Time — because some of the most promising new predictive analytics frameworks have not been in existence long enough to prove their value over the long term.

- The willingness of incumbent market leaders to develop AI-driven systems that at least run in parallel to their older analytics models, while their worth is evaluated.

- A hard break from the need to build predictive analytics systems from the ground up, as methodologies for data-gathering gradually become commoditized into SaaS offerings that can more easily be configured into agile frameworks.

- A clearer taxonomy for the value of contributing data streams, including computer vision-based sources for interior and exterior evaluation and interpretation; well-defined data pre-processing pipelines for public-facing commercial or governmental resources (such as planning applications); and clear evidence that these streams correlate to real-world conditions within an acceptable margin of error.

However, those who wait for all these certainties may end up as customers rather than providers. As one Oxford University professor recently noted in Pi Lab’s paper on PropTech cited above:

'We should remember Amara’s Law: we tend to overestimate the impact of a new technology in the short run, but we underestimate it in the long run. Sooner or later, the long run will arrive.'

Sources (36)
